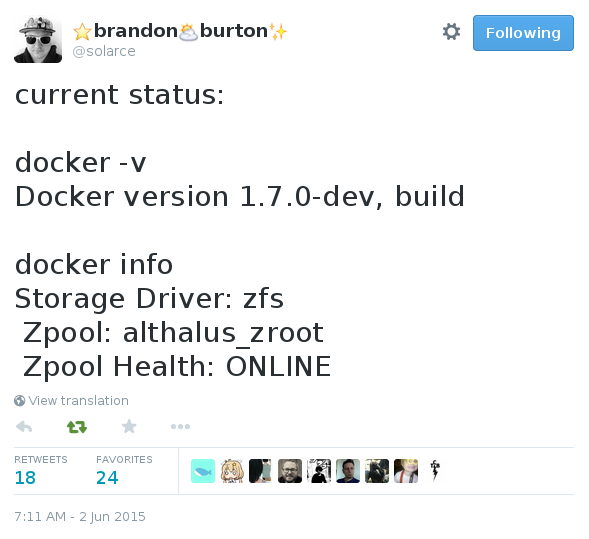

class: center, middle

# Deep dive into
# Docker storage drivers

## *

## Jérôme Petazzoni - @jpetazzo
## Docker - @docker

---

# Who am I?

- [@jpetazzo](https://twitter.com/jpetazzo)
- Tamer of Unicorns and Tinkerer Extraordinaire.red[¹]
- Grumpy French DevOps person who loves Shell scripts
  <br/>.small[Go Away Or I Will Replace You Wiz Le Very Small Shell Script]
- *Some* experience with containers
  <br/>(built and operated the dotCloud PaaS)

.footnote[.red[¹] At least one of those is actually on my business card]

---

# Outline

- Extremely short intro to Docker
- Copy-on-write
- History of Docker storage drivers
- AUFS, BTRFS, Device Mapper, Overlay.gray[fs], VFS, ZFS
- Conclusions

---

class: center, middle

# Extremely short intro to Docker

---

# What's Docker?

- A *platform* made of the *Docker Engine* and the *Docker Hub*
- The *Docker Engine* is a runtime for containers
- It's Open Source, and written in Go
  <br/>.small[.small[ http://www.slideshare.net/jpetazzo/docker-and-go-why-did-we-decide-to-write-docker-in-go ]]
- It's a daemon, controlled by a REST.gray[-ish] API
- *What is this, I don't even?!?*
  <br/>Check the recording of this online "Docker 101" session:
  <br/>https://www.youtube.com/watch?v=pYZPd78F4q4

---

# If you've never seen Docker in action ...

This will help!

.small[
```
jpetazzo@tarrasque:~$ docker run -ti python bash
root@75d4bf28c8a5:/# pip install IPython
Downloading/unpacking IPython
Downloading ipython-2.3.1-py3-none-any.whl (2.8MB): 2.8MB downloaded
Installing collected packages: IPython
Successfully installed IPython
Cleaning up...
root@75d4bf28c8a5:/# ipython
Python 3.4.2 (default, Jan 22 2015, 07:33:45)
Type "copyright", "credits" or "license" for more information.
IPython 2.3.1 -- An enhanced Interactive Python.
? -> Introduction and overview of IPython's features.
%quickref -> Quick reference.
help -> Python's own help system.
object? -> Details about 'object', use 'object??' for extra details.
In [1]:
```
]

---

# What happened here?
- We created a *container* (~lightweight virtual machine),
  <br/>with its own:
  - filesystem (based initially on a `python` image)
  - network stack
  - process space
- We started with a `bash` process
  <br/>(no `init`, no `systemd`, no problem)
- We installed IPython with pip, and ran it

---

# What did .underline[not] happen here?

- We did not make a full copy of the `python` image

The installation was done in the *container*, not the *image*:

- We did not modify the `python` image itself
- We did not affect any other container
  <br/>(currently using this image or any other image)

---

# How is this important?

- We used a *copy-on-write* mechanism
  <br/>(Well, Docker took care of it for us)
- Instead of making a full copy of the `python` image, keep track of changes between this image and our container
- Huge disk space savings (1 container = less than 1 MB)
- Huge time savings (1 container = less than 0.1s to start)

---

class: center, middle

# Copy-on-write

---

# What is copy-on-write?

*It's a mechanism that allows data to be shared.*

*The data appears to be a copy, but is only a link (or reference) to the original data.*

*The actual copy happens only when someone tries to change the shared data.*

*Whoever changes the shared data ends up using their own private copy instead.*

---

# A few metaphors

--

- First metaphor:
  <br/>white board and tracing paper

--

- Second metaphor:
  <br/>magic books with shadowy pages

--

- Third metaphor:
  <br/>just-in-time house building

---

# How does it work?

--

--

(More about this in a few minutes!)
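
---

# Copy-on-write in a few lines

The definition above can be sketched as a toy model. This is only an illustration, not how Docker or the kernel implements it: the `CowView` class and the `image` dictionary are hypothetical names made up for this slide. Two "containers" share one "image"; data is copied only when one of them writes.

```python
class CowView:
    """A toy copy-on-write view over a shared mapping (hypothetical helper)."""

    def __init__(self, base):
        self._base = base      # shared data: never modified through this view
        self._private = {}     # entries this view has changed (its own copies)

    def read(self, key):
        # Reads come from our private copy if we have one,
        # otherwise straight from the shared original (no copy is made).
        if key in self._private:
            return self._private[key]
        return self._base[key]

    def write(self, key, value):
        # The "copy" happens only here, and only for the entry being changed.
        self._private[key] = value

    def private_size(self):
        # Number of entries this view had to store on its own.
        return len(self._private)


# One shared "image", two "containers" created from it at no cost.
image = {"/bin/sh": "shell", "/etc/motd": "hello"}
c1 = CowView(image)
c2 = CowView(image)

c1.write("/etc/motd", "changed in c1")

print(c1.read("/etc/motd"))   # c1 sees its own change
print(c2.read("/etc/motd"))   # c2 still sees the original
print(c1.private_size())      # c1 only stores what it changed
```

Creating a view costs almost nothing, and each view only pays for what it changes. That is the property the rest of this talk relies on.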
---

# Copy-on-write is *everywhere*

- Process creation with `fork()`
- Consistent disk snapshots
- Efficient VM provisioning
- CONTAINERS
  <br/>(building, shipping, running thereof)

---

# Process creation

- On virtually all UNIX systems,
  <br/>the only way.red[*] to create a new process
  <br/>is by making a copy of an existing process
- This has to be fast
  <br/>(even if the process uses many GBs of RAM)
- See `fork()` and `clone()` system calls

.footnote[.red[*] Except for the first process (PID 1)]

---

# Memory snapshots

- Example: Redis `BGSAVE` command
- Achieves *consistent* snapshot
- Implementation relies on `fork()`
- One "copy" continues to serve requests,
  <br/>the other writes memory to disk and exits

---

# Mapped memory

- Load a file in memory instantaneously!
  <br/>(even if it's huge and doesn't fit in RAM)
- Data is loaded on demand from disk
- Writes to memory can be:
  - visible just by us (copy-on-write)
  - committed to disk

See: `mmap()`

---

# How does it work?

- Thanks to the MMU! (Memory Management Unit)
- Each memory access goes through it
- Translates memory accesses (location.red[¹] + operation.red[²]) into:
  - actual physical location
  - or, alternatively, a *page fault*

.footnote[
.red[¹] Location = address = pointer

.red[²] Operation = read, write, or exec
]

---

# Memory behaviors

- Read from private data = OK
- Read from shared data = OK
- Write to private data = OK
- Write to shared data = *page fault*
  - the MMU notifies the OS
  - the OS makes a copy of the shared data
  - the OS informs the MMU that the copy is private
  - the write operation resumes as if nothing happened

---

# Other scenarios

- Access to non-existent memory area = `SIGSEGV`/`SIGBUS`
  <br/>.small[(a.k.a. "Segmentation fault"/"Bus error" a.k.a. "Go and learn to use pointers")]
- Access to swapped-out memory area = load it from disk
  <br/>.small[(a.k.a.
"My program is now 1000x slower")]
- Write attempt to code area / exec attempt to non-code area:
  <br/>kill the process (sometimes)
  <br/>.small[(prevents some attacks, but also messes with JIT compilers)]

Note: one "page" is typically 4 KB.

---

# Consistent disk snapshots

- "I want to make a backup of a busy database"
  - I don't want to stop the database during the backup
  - the backup must be *consistent* (single point in time)
- Initially available (I think) on external storage (NAS, SAN),
  <br/>because/therefore:
  - it's complicated
  - it's expensive

---

# Thin provisioning for VMs.red[¹]

- Put system image on copy-on-write storage
- For each machine.red[¹], create copy-on-write instance
- If the system image contains a lot of useful software, people will almost never need to install extra stuff
- Each extra machine will only need disk space for data!

WIN $$$ (And performance, too, because of caching)

.footnote[.small[.red[¹] Not only VMs, but also physical machines with netboot, and containers!]]

---

# Copy-on-write spreads everywhere

(In no specific order; non-exhaustive list)

- LVM (Logical Volume Manager) on Linux
- ZFS on Solaris, then FreeBSD, Linux ...
- BTRFS on Linux
- AUFS, UnionMount, overlay.gray[fs] ...
- Virtual disks in VM hypervisors
  <br/>(qcow, qcow2, VirtualBox/VMware equivalents, EBS...)

---

# Copy-on-write and Docker: a love story

- Without copy-on-write...
  - it would take forever to start a container
  - containers would use up a lot of space
- Without copy-on-write "on your desktop"...
  - Docker would not be usable on your Linux machine
- There would be no Docker at all.
  <br/>And no meet-up here tonight.
  <br/>And we would all be shaving yaks instead.
  <br/>☹

---

class: center, middle

# Thank you:

Junjiro R.
Okajima (and other AUFS contributors)

Chris Mason (and other BTRFS contributors)

Jeff Bonwick, Matt Ahrens (and other ZFS contributors)

Miklos Szeredi (and other overlay.gray[fs] contributors)

The many contributors to Linux device mapper, thinp target, etc.

.small[... And all the other giants whose shoulders we're sitting on top of, basically]

---

class: center, middle

# History of Docker storage drivers

---

# First came AUFS

- Docker used to be dotCloud
  <br/>(PaaS, like Heroku, Cloud Foundry, OpenShift...)
- dotCloud started using AUFS in 2008
  <br/>(with vserver, then OpenVZ, then LXC)
- Great fit for high density, PaaS applications
  <br/>(More on this later!)

---

# AUFS is not perfect

- Not in mainline kernel
- Applying the patches used to be *exciting*
- ... especially in combination with GRSEC
- ... and other custom fancery like `setns()`

---

# But some people believe in AUFS!

- dotCloud, obviously
- Debian and Ubuntu use it in their default kernels,
  <br/>for Live CD and similar use cases:
  - Your root filesystem is a copy-on-write between
    <br/>- the read-only media (CD, DVD...)
    <br/>- and a read-write media (disk, USB stick...)
- As it happens, we also .red[♥] Debian and Ubuntu very much
- First version of Docker is targeted at Ubuntu (and Debian)

---

# Then, some people started to believe in Docker

- Red Hat users *demanded* Docker on their favorite distro
- Red Hat Inc. wanted to make it happen
- ... and contributed support for the Device Mapper driver
- ... then the BTRFS driver
- ... then the overlay.gray[fs] driver

.footnote[Note: other contributors also helped tremendously!]

---

class: center, middle

# Special thanks:

Alexander Larsson

Vincent Batts

\+ all the other contributors and maintainers, of course

.small[(But those two guys have played an important role in the initial support, then maintenance, of the BTRFS, Device Mapper, and overlay drivers.
Thanks again!)]

---

class: center, middle

# Let's see each
# storage driver
# in action

---

class: center, middle

# AUFS

---

# In Theory

- Combine multiple *branches* in a specific order
- Each branch is just a normal directory
- You generally have:
  - at least one read-only branch (at the bottom)
  - exactly one read-write branch (at the top)

(But other fun combinations are possible too!)

---

# When opening a file...

- With `O_RDONLY` - read-only access:
  - look it up in each branch, starting from the top
  - open the first one we find
- With `O_WRONLY` or `O_RDWR` - write access:
  - look it up in the top branch;
    <br/>if it's found here, open it
  - otherwise, look it up in the other branches;
    <br/>if we find it, copy it to the read-write (top) branch,
    <br/>then open the copy

.footnote[That "copy-up" operation can take a while if the file is big!]

---

# When deleting a file...

- A *whiteout* file is created
  <br/>(if you know the concept of "tombstones", this is similar)

.small[
```
# docker run ubuntu rm /etc/shadow
# ls -la /var/lib/docker/aufs/diff/$(docker ps --no-trunc -lq)/etc
total 8
drwxr-xr-x 2 root root 4096 Jan 27 15:36 .
drwxr-xr-x 5 root root 4096 Jan 27 15:36 ..
-r--r--r-- 2 root root 0 Jan 27 15:36 .wh.shadow
```
]

---

# In Practice

- The AUFS mountpoint for a container is
  <br/>`/var/lib/docker/aufs/mnt/$CONTAINER_ID/`
- It is only mounted when the container is *running*
- The AUFS branches (read-only and read-write) are in
  <br/>`/var/lib/docker/aufs/diff/$CONTAINER_OR_IMAGE_ID/`
- All writes go to `/var/lib/docker`

```
dockerhost# df -h /var/lib/docker
Filesystem Size Used Avail Use% Mounted on
/dev/xvdb 15G 4.8G 9.5G 34% /mnt
```

---

# Under the hood

- To see details about an AUFS mount:
  - look for its internal ID in `/proc/mounts`
  - look in `/sys/fs/aufs/si_.../br*`
  - each branch (except the two top ones)
    <br/>translates to an image

---

# Example

.small[
```
dockerhost# grep c7af /proc/mounts
none /mnt/.../c7af...a63d aufs rw,relatime,si=2344a8ac4c6c6e55 0 0
dockerhost# grep . /sys/fs/aufs/si_2344a8ac4c6c6e55/br[0-9]*
/sys/fs/aufs/si_2344a8ac4c6c6e55/br0:/mnt/c7af...a63d=rw
/sys/fs/aufs/si_2344a8ac4c6c6e55/br1:/mnt/c7af...a63d-init=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br2:/mnt/b39b...a462=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br3:/mnt/615c...520e=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br4:/mnt/8373...cea2=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br5:/mnt/53f8...076f=ro+wh
/sys/fs/aufs/si_2344a8ac4c6c6e55/br6:/mnt/5111...c158=ro+wh
dockerhost# docker inspect --format {{.Image}} c7af
b39b81afc8cae27d6fc7ea89584bad5e0ba792127597d02425eaee9f3aaaa462
dockerhost# docker history -q b39b
b39b81afc8ca
615c102e2290
837339b91538
53f858aaaf03
511136ea3c5a
```
]

---

# Performance, tuning

- AUFS `mount()` is fast, so creation of containers is quick
- Read/write access has native speeds
- But initial `open()` is expensive in two scenarios:
  - when writing big files (log files, databases ...)
  - with many layers + many directories in `PATH`
    <br/>(dynamic loading, anyone?)
- Protip: when we built dotCloud, we ended up putting all important data on *volumes*
- When starting the same container 1000x, the data is loaded only once from disk, and cached only once in memory (but `dentries` will be duplicated)

---

class: center, middle

# Device Mapper

---

# Preamble

- Device Mapper is a complex subsystem; it can do:
  - RAID
  - encrypted devices
  - snapshots (i.e. copy-on-write)
  - and some other niceties
- In the context of Docker, "Device Mapper" means
  <br/>"the Device Mapper system + its *thin provisioning target*"
  <br/>(sometimes noted "thinp")

---

# In theory

- Copy-on-write happens on the *block* level
  <br/>(instead of the *file* level)
- Each container and each image gets its own block device
- At any given time, it is possible to take a snapshot:
  - of an existing container (to create a frozen image)
  - of an existing image (to create a container from it)
- If a block has never been written to:
  - it's assumed to be all zeros
  - it's not allocated on disk
    <br/>(hence "thin" provisioning)

---

# In practice

- The mountpoint for a container is
  <br/>`/var/lib/docker/devicemapper/mnt/$CONTAINER_ID/`
- It is only mounted when the container is *running*
- The data is stored in two files, "data" and "metadata"
  <br/>(More on this later)
- Since we are working on the block level, there is not much visibility on the diffs between images and containers

---

# Under the hood

- `docker info` will tell you about the state of the pool
  <br/>(used/available space)
- List devices with `dmsetup ls`
- Device names are prefixed with `docker-MAJ:MIN-INO`
  <br/>.small[MAJ, MIN, and INO are derived from the block major, block minor, and inode number where the Docker data is located (to avoid conflict when running multiple Docker instances, e.g.
with Docker-in-Docker)]
- Get more info about them with `dmsetup info`, `dmsetup status`
  <br/>(you shouldn't need this, unless the system is badly borked)
- Snapshots have an internal numeric ID
- `/var/lib/docker/devicemapper/metadata/$CONTAINER_OR_IMAGE_ID`
  <br/>is a small JSON file tracking the snapshot ID and its size

---

<!--
# Example
-->

# Extra details

- Two storage areas are needed:
  <br/>one for *data*, another for *metadata*
- "data" is also called the "pool"; it's just a big pool of blocks
  <br/>(Docker uses the smallest possible block size, 64 KB)
- "metadata" contains the mappings between virtual offsets (in the snapshots) and physical offsets (in the pool)
- Each time a new block (or a copy-on-write block) is written, a block is allocated from the pool
- When there are no more blocks in the pool, attempts to write will stall until the pool is increased (or the write operation aborted)

---

# Performance

- By default, Docker puts data and metadata on a loop device backed by a sparse file
- This is great from a usability point of view
  <br/>(zero configuration needed)
- But terrible from a performance point of view:
  - each time a container writes to a new block,
  - a block has to be allocated from the pool,
  - and when it's written to,
  - a block has to be allocated from the sparse file,
  - and sparse file performance isn't great anyway

---

# Tuning

- Do yourself a favor: if you use Device Mapper,
  <br/>put data (and metadata) on real devices!
  - stop Docker
  - change parameters
  - wipe out `/var/lib/docker` (important!)
  - restart Docker

.small[
```
docker -d --storage-opt dm.datadev=/dev/sdb1 --storage-opt dm.metadatadev=/dev/sdc1
```
]

---

# More tuning

- Each container gets its own block device
  - with a real FS on it
- So you can also adjust (with `--storage-opt`):
  - filesystem type
  - filesystem size
  - `discard` (more on this later)
- Caveat: when you start 1000x containers,
  <br/>the files will be loaded 1000x from disk!
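
---

# Block-level copy-on-write, sketched

The thin provisioning behavior described in the Device Mapper slides can be modeled in a few lines. This is a toy sketch, not the kernel's implementation: `ThinPool`, `ThinDevice`, and `PoolFull` are hypothetical names, and the sketch raises an error when the pool is exhausted, whereas the real thinp target stalls the write.

```python
BLOCK_SIZE = 64 * 1024  # 64 KB, the block size mentioned in these slides


class PoolFull(Exception):
    """Raised by this sketch when the pool has no free block left."""


class ThinPool:
    """The "data" area: a fixed number of physical blocks."""

    def __init__(self, total_blocks):
        self.total_blocks = total_blocks
        self.used_blocks = 0
        self.blocks = {}  # physical block number -> bytes

    def allocate(self, data):
        if self.used_blocks >= self.total_blocks:
            raise PoolFull("no free block left in the pool")
        phys = self.used_blocks
        self.used_blocks += 1
        self.blocks[phys] = data
        return phys


class ThinDevice:
    """One image or container: the "metadata" (virtual -> physical mapping)."""

    def __init__(self, pool, mapping=None):
        self.pool = pool
        self.mapping = dict(mapping or {})
        self.owned = set()  # physical blocks not shared with any snapshot

    def snapshot(self):
        # Only the mapping is copied; no data block is allocated.
        # From now on, every existing block is shared with the snapshot.
        self.owned = set()
        return ThinDevice(self.pool, self.mapping)

    def read(self, vblock):
        phys = self.mapping.get(vblock)
        if phys is None:
            return b"\0" * BLOCK_SIZE  # never written: assumed all zeros
        return self.pool.blocks[phys]

    def write(self, vblock, data):
        phys = self.mapping.get(vblock)
        if phys in self.owned:
            self.pool.blocks[phys] = data  # private block: write in place
        else:
            new = self.pool.allocate(data)  # new or shared block: allocate
            self.mapping[vblock] = new
            self.owned.add(new)


pool = ThinPool(total_blocks=3)
image = ThinDevice(pool)
image.write(0, b"base")          # allocates one block
container = image.snapshot()     # allocates nothing
container.write(0, b"changed")   # copy-on-write: allocates a second block
container.write(7, b"new")       # first write to block 7: allocates a third
print(image.read(0), container.read(0), pool.used_blocks)
```

Because only the mapping is copied, taking a snapshot is cheap no matter how large the device is. The cost is paid later, one block at a time, on writes.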
---

# See also

.small[
- https://www.kernel.org/doc/Documentation/device-mapper/thin-provisioning.txt
- https://github.com/docker/docker/tree/master/daemon/graphdriver/devmapper
- http://en.wikipedia.org/wiki/Sparse_file
- http://en.wikipedia.org/wiki/Trim_%28computing%29
]

---

class: center, middle

# BTRFS

---

# In theory

- Do the whole "copy-on-write" thing at the filesystem level
- Create.red[¹] a "subvolume" (imagine `mkdir` with Super Powers)
- Snapshot.red[¹] any subvolume at any given time
- BTRFS integrates the snapshot and block pool management features at the filesystem level, instead of the block device level

.footnote[.red[¹] This can be done with the `btrfs` tool.]

---

# In practice

- `/var/lib/docker` has to be on a BTRFS filesystem!
- The BTRFS mountpoint for a container or an image is
  <br/>`/var/lib/docker/btrfs/subvolumes/$CONTAINER_OR_IMAGE_ID/`
- It should be present even if the container is not running
- Data is not written directly, it goes to the journal first
  <br/>(in some circumstances.red[¹], this will affect performance)

.footnote[.red[¹] E.g. uninterrupted streams of writes.
<br/>The performance will be half of the "native" performance.]

---

# Under the hood

- BTRFS works by dividing its storage in *chunks*
- A chunk can contain data or metadata
- You can run out of chunks (and get `No space left on device`)
  <br/>even though `df` shows space available
  <br/>(because the chunks are not full)
- Quick fix:

```
# btrfs filesys balance start -dusage=1 /var/lib/docker
```

---

<!--
# Example
-->

# Performance, tuning

- Not much to tune
- Keep an eye on the output of `btrfs filesys show`!
This filesystem is doing fine:

.small[
```
# btrfs filesys show
Label: none uuid: 80b37641-4f4a-4694-968b-39b85c67b934
Total devices 1 FS bytes used 4.20GiB
devid 1 size 15.25GiB used 6.04GiB path /dev/xvdc
```
]

This one, however, is full (no free chunk) even though there is not that much data on it:

.small[
```
# btrfs filesys show
Label: none uuid: de060d4c-99b6-4da0-90fa-fb47166db38b
Total devices 1 FS bytes used 2.51GiB
devid 1 size 87.50GiB used 87.50GiB path /dev/xvdc
```
]

---

class: center, middle

# Overlay.gray[fs]

---

# Preamble

What's with the grayed .gray[fs]?

- It used to be called (and have filesystem type) `overlayfs`
- When it was merged in 3.18, this was changed to `overlay`

---

# In theory

- This is just like AUFS, with minor differences:
  - only two branches (called "layers")
  - but branches can be overlays themselves

---

# In practice

- You need kernel 3.18
- On Ubuntu.red[¹]:
  - go to http://kernel.ubuntu.com/~kernel-ppa/mainline/
  - locate the most recent directory, e.g. `v3.18.4-vidi`
  - download the `linux-image-..._amd64.deb` file
  - `dpkg -i` that file, reboot, enjoy

.footnote[.red[¹] Adaptation to other distros left as an exercise for the reader.
]

---

# Under the hood

- Images and containers are materialized under
  <br/>`/var/lib/docker/overlay/$ID_OF_CONTAINER_OR_IMAGE`
- Images just have a `root` subdirectory
  <br/>(containing the root FS)
- Containers have:
  - `lower-id` → file containing the ID of the image
  - `merged/` → mount point for the container .small[(when running)]
  - `upper/` → read-write layer for the container
  - `work/` → temporary space used for atomic copy-up

---

<!--
# Example
-->

# Performance, tuning

- Implementation detail:
  <br/>identical files are hardlinked between images
  <br/>(this avoids doing composed overlays)
- Not much to tune at this point
- Performance should be slightly better than AUFS:
  - no `stat()` explosion
  - good memory use
  - slow copy-up, still (nobody's perfect)

---

class: center, middle

# VFS

---

# In theory

- No copy on write. Docker does a full copy each time!
- Doesn't rely on those fancy-pesky kernel features
- Good candidate when porting Docker to new platforms
  <br/>(think FreeBSD, Solaris...)
- Space .underline[in]efficient, slow

---

# In practice

- Might be useful for production setups

(If you don't want / cannot use volumes, and don't want / cannot use any of the copy-on-write mechanisms!)

---

class: center, middle

# ZFS

--

### (Coming Soon To A Docker Release Near You)

--

### (i.e. it is in 1.7-RC)

--

## WOOT!

---

class: middle, center

---

# Sneak peek

- Obviously you need to set up ZFS yourself
- *Should* be compatible with all flavors
  <br/>(kernel-based and FUSE)
- Want to play with it?
  <br/>https://master.dockerproject.com/

---

# From a user POV...

- Similar to BTRFS
- Fast snapshot, fast creation, fast snapshot...
- Epic performance and reliability (YMMV)
- ZFS has a reputation for being quite memory-hungry
  <br/>(=you probably don't want that in a tiny VM)

---

# Implementation details

- Shells out to `zfs-utils`
- Why not `libzfs`?
  <br/>Because it's tied to a specific kernel version
- A Tale Of Two Pull Requests

---

class: center, middle

# Conclusions

---

class: center, middle

*The nice thing about Docker storage drivers,
<br/>is that there are so many of them to choose from.*

---

# What do, what do?

- If you do PaaS or other high-density environment:
  - AUFS (if available on your kernel)
  - overlayfs (otherwise)
- If you put big writable files on the CoW filesystem:
  - BTRFS, ZFS, or Device Mapper (pick the one you know best)
- *Wait, really, you want me to pick one!?!*

---

class: center, middle

# Bottom line

---

class: center, middle

*The best storage driver to run your production
<br/>will be the one with which you and your team
<br/>have the most extensive operational experience.*

---

class: center, middle

# Bonus track

## `discard` and `TRIM`

---

# `TRIM`

- Command sent to an SSD, to tell it:
  <br/>*"that block is not in use anymore"*
- Useful because on SSD, *erase* is very expensive (slow)
- Allows the SSD to pre-erase cells in advance
  <br/>(rather than on-the-fly, just before a *write*)
- Also meaningful on copy-on-write storage
  <br/>(if/when every snapshot has trimmed a block, it can be freed)

---

# `discard`

- Filesystem option meaning:
  <br/>*"can I has `TRIM` on this pls"*
- Can be enabled/disabled at any time
- Filesystem can also be trimmed manually with `fstrim`
  <br/>(even while mounted)

---

# The `discard` quandary

- `discard` works on Device Mapper + loopback devices
- ... but is particularly slow on loopback devices
  <br/>(the loopback file needs to be "re-sparsified" after container or image deletion, and this is a slow operation)
- You can turn it on or off depending on your preference

---

class: center, middle

# That's all folks!

---

class: center, middle

# Questions?